The rise of AI assistants. #

What are they? #

AI assistants have become an integral part of our daily lives, offering a wide range of functionalities from answering questions to automating tasks. These assistants are powered by advanced machine learning models that can process vast amounts of data and provide responses to user queries. AI assistants typically operate by using a combination of natural language processing (NLP) techniques, machine learning algorithms, and large datasets. They are trained on diverse information sources to understand and generate human-like responses. When a user interacts with an AI assistant, the system processes their input, analyzes it for context and intent, and then generates a relevant response based on its training.

The open source claim #

Most of the AI assistants on the market are proprietary software owned by giants (or rapidly growing companies) like OpenAI, Meta or Anthropic. Even when companies advertise their models as open source, the reality is that the models they provide and the data used to train them are often not fully open to the public or free to use. There is also a growing concern about copyright issues surrounding the training phase of these models. The large datasets gathered from all over the internet often contain all kinds of licensed content, and collecting them does not always respect the rights of content creators. This lack of transparency and control over the training data can lead to ethical concerns and potential misuse of the technology.

The cost of using AI assistants. #

Although most cloud-based services offer a free usage tier, the rapid growth of the sector has led to rising costs, with premium features kept behind paywalls. This can be particularly problematic for small businesses or individuals who may not have the budget for them. The paywall model can also create a digital divide, where only those with the financial means can access advanced AI capabilities.

What about self-hosting? #

Self-hosting an AI assistant seems like a perfect answer to the above concerns. You keep control of your data and, if you choose one of the many ‘open source’ models, you can basically run it for free. However, running AI services on your local machine still requires significant computational resources. In practice, this means that you need a powerful computer with a good GPU to handle the processing demands. You can also run models on CPU only, albeit at a slower rate. All of this can be achieved with a few different tools:

- Ollama to run the models

- Page Assist (or OpenWebUI) to interact with the models from your browser

- Continue for IDE integration

Install and run LLMs with Ollama #

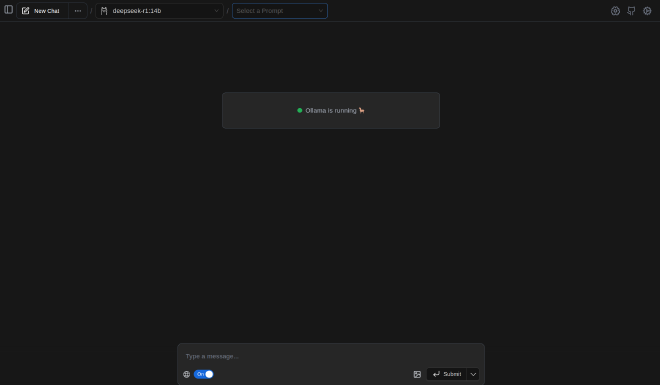

What is Ollama? #

Ollama is a tool designed to run large language models (LLMs) locally on your computer. It allows you to access a variety of pre-trained models, ranging from versatile general-purpose models to specialized ones for specific domains or tasks. Some of the supported models include Llama 2, Code Llama, Falcon, Mistral, WizardCoder, and more. Ollama simplifies the process of downloading and managing these models (its usage resembles Docker), offering a user-friendly experience for those who want to use advanced language models directly on their system.

Prerequisites #

As you might know from previous posts, I’m running an Arch Linux system on a somewhat powerful PC with a dedicated AMD GPU that has enough VRAM to run at least the basic models from Ollama. You should also be aware that not all AMD GPUs are compatible with the ROCm framework that allows models to run on the GPU. In my case, for example, I needed to install specific packages and customize the configuration of Ollama to make models run on the GPU. Depending on your hardware and system, the following guidelines may differ, and you should always have a look at the documentation.

Install #

The documentation explains how to install Ollama on your system. I decided to use the official package provided by my distribution and to enable the systemd service so that Ollama starts automatically at boot time. You can probably do the same on most distributions. I also went for the ROCm version, which allows models to run on an AMD GPU. Again, depending on your system, you may need to follow a different installation process.
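As an illustration of the kind of customization mentioned in the prerequisites, here is a minimal sketch of a systemd drop-in for the Ollama service. It assumes that the unit is named ollama.service and that your card needs the HSA_OVERRIDE_GFX_VERSION override described in Ollama's GPU documentation. The value 10.3.0 is only a placeholder to adapt to your own GPU, and your setup may need something else entirely.

```ini
# /etc/systemd/system/ollama.service.d/override.conf
# Hypothetical drop-in, created with: sudo systemctl edit ollama.service
[Service]
# Ask ROCm to treat the GPU as a supported target (example value, adapt it to your card)
Environment="HSA_OVERRIDE_GFX_VERSION=10.3.0"
```

After saving, restarting the service with `sudo systemctl restart ollama.service` applies the change, and `journalctl -u ollama.service` should show whether the GPU was detected.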

Download and run models #

After installing Ollama, you can download and run models. The documentation provides a list of available models that you can choose from. You can also create your own models or customize their properties. For example, to download Llama 3.2 you can run the following command:

```shell
ollama pull llama3.2
```

This downloads the model from the official repository and stores it locally. You can then run the model using the following command:

```shell
ollama run llama3.2
```

You will be presented with a prompt in which you can input your query. To close the session, hit Ctrl + d. Once a model is downloaded, Ollama does not need to access the internet, and all your chat history is stored locally. It has many other features: you can, for example, customize the way each model answers you or create specific prompts. I won’t cover them here and will let you browse the documentation.
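To give an idea of what customizing a model looks like, here is a minimal sketch of a Modelfile. The base model is the llama3.2 pulled above; the temperature value and the system prompt are arbitrary choices made for the example, not recommended settings.

```
# Modelfile (hypothetical example)
FROM llama3.2
# Lower temperature for more deterministic answers
PARAMETER temperature 0.3
# System prompt applied to every conversation
SYSTEM """You are a concise assistant. Answer in short paragraphs."""
```

Saved as Modelfile, this could be built with `ollama create concise-llama -f Modelfile` and started with `ollama run concise-llama`. The name concise-llama is made up for the example.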

Now, the basic terminal interface can be enough for some users, but in most cases you probably want your queries to interact with the web (and expose citations) for certain tasks, and you may also prefer a nicer interface.

Add a GUI interface to interact with your models with Page Assist #

Page Assist web browser extension #

Page Assist is an open source web browser extension available for Chromium- and Firefox-based browsers. It allows you to interact with your local models directly from the web pages you are visiting, providing real-time responses and citations. You can use a full-tab interface or a sidebar, and you can also use keyboard shortcuts to access it quickly.

In the settings page, you can set up the connection with the local Ollama server and interact with the different features of the extension:

- Model Management: You can easily download, update, or remove models.

- Custom Prompts: Define custom prompts to tailor the behavior of your AI assistant.

- Retrieval-Augmented Generation (RAG): Add knowledge documents to enhance the responses with relevant information.
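If the extension cannot find your models, a quick way to check the connection is to query the Ollama HTTP API directly. The sketch below assumes that the server listens on its default address, http://localhost:11434, and that curl is installed; OLLAMA_URL is just a variable name chosen for the example.

```shell
# Default address of the local Ollama API (assumption: OLLAMA_HOST was not changed)
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# /api/tags returns the locally available models as JSON
if curl -fsS --max-time 5 "$OLLAMA_URL/api/tags"; then
  echo
  echo "Ollama is reachable at $OLLAMA_URL"
else
  echo "Ollama is not reachable at $OLLAMA_URL" >&2
fi
```

If the request succeeds, the same URL is what you would enter in the Page Assist settings.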

The sidebar view is particularly useful when interacting with a specific web page. This way you can easily ask your local assistant to summarize the main article on the page, or translate it to another language.

Enabling the web search feature can be very handy when trying to get more context on a topic, or when you need to find specific information.

If you need more, there is OpenWebUI #

There are more powerful options than the simple Page Assist extension. One of the most popular is OpenWebUI. It offers the same features as Page Assist and probably more advanced ones, such as the ability to use proprietary models like ChatGPT or options for text-to-image generation.

OpenWebUI is also an open-source project. The service can be deployed as a Docker container, and the web interface is then accessible on your local machine, or exposed to the internet if you want.

I have not tried it yet, but it is definitely on my list of things to explore.

What about a coding assistant in your favorite IDE? #

Continue for VSCode and JetBrains IDEs integration #

Obviously, as a tech enthusiast, I see coding assistance as one of the main benefits of this kind of AI assistant. Let’s see how we can integrate it into our favorite IDEs.

After a quick web search, I found Continue, an IDE plugin available for VSCode and JetBrains IDEs (like PyCharm, IntelliJ IDEA, WebStorm, etc.). It offers the same kind of features as the integrated options these IDEs offer, but Continue can connect to your local Ollama server and use your local models to generate code or provide suggestions.

You can declare your Ollama models in the .continue/config.json file, and this configuration is shared by all the IDEs in which you have installed Continue.
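A minimal sketch of what such a file could look like is shown below. The keys follow Continue's config.json format to the best of my knowledge, so check them against the plugin's documentation. The titles and the model tags (qwen2.5-coder:7b for chat, qwen2.5-coder:1.5b for autocompletion) are assumptions made for the example; replace them with the models you actually pulled with Ollama.

```json
{
  "models": [
    {
      "title": "Qwen2.5-Coder (chat)",
      "provider": "ollama",
      "model": "qwen2.5-coder:7b"
    }
  ],
  "tabAutocompleteModel": {
    "title": "Qwen2.5-Coder (autocomplete)",
    "provider": "ollama",
    "model": "qwen2.5-coder:1.5b"
  }
}
```

Using a smaller model for autocompletion is a common trade-off, since completions need to come back quickly while chat answers can take a little longer.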

This is a great way to keep your coding assistance local, secure, and under your control. As I have never used a proprietary coding assistant like GitHub Copilot, I can’t tell whether the performance of a local model like the Qwen2.5-Coder I’m using right now is better or worse. But I can definitely say that its suggestions are often useful and relevant, even if I could live without them. The code generation prompt is not a feature I use much, but the code completion feature has already saved me from some annoying bug fixes just by checking the coherence of the syntax.

Disclaimer #

And here is the last point I wanted to make: the Continue AI assistant completes not only code but also docstrings, headers, comments, commit messages, and even markdown articles like the one I am writing now…

So, you can see where this is going: parts of what you have read so far were written by AI. Not all of it, I assure you, and the experience has not been so great for me. For general, non-technical sentences, the auto-completion was at best inspiring, and what it produced was often not usable as is. In more technical parts, it can become a real pain to use. Sometimes the AI would suggest things that were not relevant or didn’t match the context at all. It then becomes just a distraction and pollutes what you are trying to write.

So, while I am excited about the potential of AI in software development, I am still cautious about relying too heavily on it. It’s a tool that can be very helpful when used correctly, but it’s important to remember that it’s not perfect and should be used with care.

Conclusion #

Reflecting on my experience with AI tools like Page Assist and Ollama, I approach their use with cautious optimism. These tools can effectively enhance productivity when used wisely. The ability to self-host these models provides crucial benefits, including local control, enhanced privacy and cost-efficiency, all of which I value highly. While they can be helpful, I remain mindful of their limitations and ensure they serve as aids rather than replacements for human decision-making.