Some Definitions #

Let’s start with some context and definitions about what we are going to use.

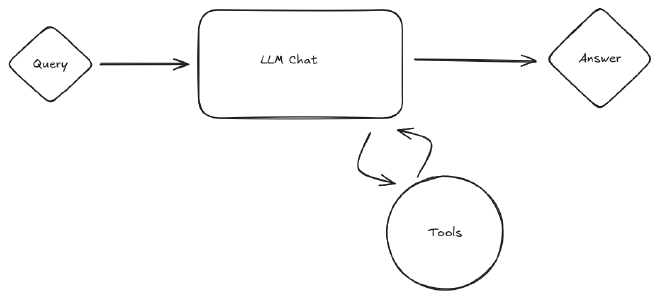

Agentic AI #

Agentic AI refers to intelligent agents that can perceive their environment, plan actions, and execute them autonomously to achieve high‑level goals. Unlike a simple prompt‑response model, an agent can break a goal down into sub-tasks and call the appropriate tools to complete them. Depending on its setup, it can browse the web, analyze data, call APIs, run code, or query databases. You can also include a Human-in-the-Loop to control the agent’s actions.

Roughly speaking, the LLM acts as the brain of the agent, planning and making decisions, while the tools are its means of interacting with its environment.
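To make the "brain plus tools" idea concrete, here is a minimal sketch of an agent loop in plain Python. Everything in it is hypothetical: `fake_llm` stands in for a real model and simply hard-codes a two-step plan, and `TOOLS` is a toy tool registry.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS: dict[str, Callable[..., float]] = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}


def fake_llm(goal: str, observations: list[float]) -> dict:
    """Stand-in for the LLM 'brain': returns the next action as a dict.

    A real model would derive this decision from the conversation so far;
    here the plan is hard-coded for the goal '(2 + 3) * 4'.
    """
    if not observations:
        return {"tool": "add", "args": (2, 3)}
    if len(observations) == 1:
        return {"tool": "multiply", "args": (observations[0], 4)}
    return {"final_answer": observations[-1]}


def run_agent(goal: str, max_steps: int = 5) -> float:
    """Loop: ask the 'LLM' for an action, run the tool, feed the result back."""
    observations: list[float] = []
    for _ in range(max_steps):
        action = fake_llm(goal, observations)
        if "final_answer" in action:
            return action["final_answer"]
        result = TOOLS[action["tool"]](*action["args"])
        observations.append(result)
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("compute (2 + 3) * 4"))  # prints 20
```

The `max_steps` guard is a design choice worth keeping in real agents too: it prevents a confused model from looping forever.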

Local LLM inference #

Local LLM inference means running a large language model directly on your own hardware (CPU, GPU) instead of sending data to the cloud. The main benefits are:

- No network round‑trips for every query.

- Data privacy – no sensitive text leaves your premises.

- Cost efficiency – you don’t have to pay for each query.

I discussed how to set up Ollama in a previous post. Performance is obviously limited by your hardware, but it is a good choice for experimenting. Other options are the free tiers of inference providers, or paid ones. Here, HuggingFace shines again, as it provides a simple way to call inference endpoints for the various models it hosts. You can also connect to your favorite mainstream service, such as OpenAI or Anthropic. All of this is explained in the tutorials from the HuggingFace courses.
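As an illustration of what local inference looks like in practice, the sketch below builds a request for Ollama's local HTTP chat endpoint using only the standard library. It assumes Ollama is listening on its default port 11434; the model name passed in is a placeholder for whatever model you have pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming chat request for a local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response instead of chunks
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as response:
        body = json.loads(response.read())
    return body["message"]["content"]
```

With a running Ollama instance, `chat("your_model_name", "Why is the sky blue?")` would return the model's answer; nothing leaves your machine.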

Model Context Protocol (MCP) #

The Model Context Protocol (MCP) is an open protocol that standardizes how external data and tools (e.g., APIs, databases, files) are exposed to an LLM application as context. Think of it as a contract that defines:

- Context schema – JSON schema for the data structure.

- Metadata – Provenance, freshness, and privacy tags.

- Access patterns – How to retrieve, cache, and stream the data.

- Security controls – Token scopes and rate limits.

MCP enables plug‑and‑play context for agents: the same agent can query a weather API, a CSV file, or a Neo4j graph, all through a uniform interface. It acts as an accelerator for the development of agents as any set of tools can be implemented independently of the AI models used to power them.

To explain how it works in simple terms: the tools are exposed by an MCP server, your application or agent is called a host, and this host implements an MCP client as one of its functionalities. You can find a more in-depth explanation in this course.

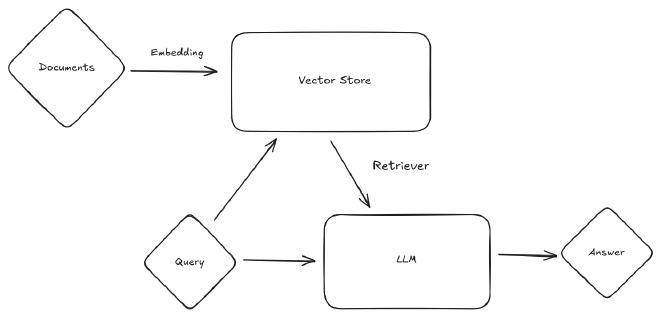
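Under the hood, the client and the server exchange JSON-RPC 2.0 messages such as `tools/list` and `tools/call`. The sketch below is a toy in-process dispatcher meant only to show the shape of those messages; it is not the MCP SDK, it skips the initialization handshake and the transport layer, and the `TOOLS` table is a hypothetical stand-in for real tool registration.

```python
import json

# Toy tool table; a real server would derive this from decorated functions.
TOOLS = {
    "multiply": {
        "description": "Multiplies two numbers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "fn": lambda a, b: a * b,
    }
}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request the way an MCP server would (simplified)."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        value = TOOLS[params["name"]]["fn"](**params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The client side (inside the host) only needs to send messages of this shape.
call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "multiply", "arguments": {"a": 6, "b": 7}}}
print(json.dumps(handle(call)))
```

Because the tool is described by name, description and input schema, the host can hand that description to any LLM without knowing how the tool is implemented.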

Retrieval Augmented Generation (RAG) #

Retrieval Augmented Generation is a technique that augments the LLM’s answer with external knowledge fetched in real time. The typical pipeline is:

- Query formulation – The agent generates a search query or a prompt.

- Retrieval – The system looks up the most relevant passages from a vector store, knowledge graph, or web search.

- Fusion – The retrieved snippets are concatenated with the prompt.

- Generation – The LLM produces the final answer.

RAG is especially useful when dealing with highly specialized domains or rapidly changing data that the trained LLM would not have seen.
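The four steps above can be sketched in a few lines of plain Python. This is a toy under stated assumptions: retrieval ranks a hard-coded `DOCUMENTS` list by shared words instead of using embeddings, and `generate` is a placeholder where a real LLM call would go.

```python
import re

# Hypothetical mini knowledge base.
DOCUMENTS = [
    "Ollama runs large language models locally on your own hardware.",
    "Neo4j is a graph database queried with the Cypher language.",
    "Gradio builds simple web interfaces for machine learning demos.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[str]:
    """Retrieval step: rank documents by the number of words shared with the query."""
    query_words = tokenize(query)
    ranked = sorted(DOCUMENTS, key=lambda d: len(tokenize(d) & query_words), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Fusion step: prepend the retrieved passages to the user question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def generate(prompt: str) -> str:
    """Generation step: placeholder for the LLM call."""
    return f"[LLM answer to a prompt of {len(prompt)} characters]"


question = "Which query language does Neo4j use?"
prompt = build_prompt(question, retrieve(question))
print(prompt)
print(generate(prompt))
```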

A quick word about Agentic RAG here. In the traditional RAG implementation, the retrieval tool is called first, and its answer is then passed as additional context to the LLM along with the initial user query. In agentic RAG, the workflow is more flexible because the agent decides whether to use the retriever tool at all. Another big advantage is the query reformulation that the agent performs. In traditional RAG, the initial user query, oftentimes a complete question, is generally passed as-is to the retriever, which is not optimal. In agentic RAG, the agent LLM reformulates the query before passing it to the retriever, which improves the results.

Vector Stores #

Vector stores are a foundation of RAG implementations. Their role is to turn raw knowledge into searchable embeddings, enabling RAG systems to use specific information without having to load entire documents into memory.

In general, the input documents are split into smaller chunks. This turns a monolithic document into a collection of searchable, semantically coherent units that fit within the LLM’s context window and can be ranked accurately.
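Here is a minimal sketch of chunking and similarity search. It is only an illustration: `embed` uses word counts as a toy replacement for a real embedding model, and `ToyVectorStore` is a hypothetical in-memory class, not an actual vector database.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    words = text.split()
    step = size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, last_start, step)]


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        """Chunk the document and store each chunk with its embedding."""
        for piece in chunk(text):
            self.items.append((piece, embed(piece)))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [piece for piece, _ in ranked[:k]]


TEXT = ("LangGraph models an agent as a graph of nodes and edges. "
        "Gradio provides a ChatInterface component to build a chat page quickly.")
store = ToyVectorStore()
store.add(TEXT)
print(store.search("chat page"))
```

The overlap between consecutive chunks is there so that a sentence cut at a chunk boundary still appears whole in at least one chunk.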

Knowledge Graphs #

A knowledge graph is a graph‑structured representation of data with nodes and edges representing entities and their relationships. Stored in a graph database like Neo4J, a knowledge graph supports efficient traversal, semantic search, and query languages enabling agents to answer complex questions, discover hidden connections, and supply structured evidence.
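To give a feel for what traversing such a graph means, here is a toy in-memory version using plain triples. It is not Neo4J or Cypher; the node names and the relation labels `PUBLISHED_BY` and `IN_TOPIC` are made up for the illustration.

```python
from collections import Counter
from itertools import combinations

# (subject, relation, object) triples: a toy stand-in for a graph database.
TRIPLES = [
    ("article_1", "PUBLISHED_BY", "Alice"),
    ("article_1", "PUBLISHED_BY", "Bob"),
    ("article_2", "PUBLISHED_BY", "Alice"),
    ("article_2", "PUBLISHED_BY", "Bob"),
    ("article_3", "PUBLISHED_BY", "Carol"),
    ("article_1", "IN_TOPIC", "Agents"),
    ("article_3", "IN_TOPIC", "Agents"),
]


def objects(subject: str, relation: str) -> set[str]:
    """Follow outgoing edges of one type from a node."""
    return {o for s, r, o in TRIPLES if s == subject and r == relation}


def subjects(relation: str, obj: str) -> set[str]:
    """Follow edges of one type backwards into a node."""
    return {s for s, r, o in TRIPLES if r == relation and o == obj}


def authors_in_topic(topic: str) -> set[str]:
    """Two-hop traversal: topic <- article -> author."""
    return {a for article in subjects("IN_TOPIC", topic)
            for a in objects(article, "PUBLISHED_BY")}


def coauthor_counts() -> Counter:
    """Count how many articles each pair of authors published together."""
    counts: Counter = Counter()
    for article in {s for s, r, _ in TRIPLES if r == "PUBLISHED_BY"}:
        for pair in combinations(sorted(objects(article, "PUBLISHED_BY")), 2):
            counts[pair] += 1
    return counts


print(sorted(authors_in_topic("Agents")))
print(coauthor_counts())
```

Questions like "who wrote together?" require hopping across several relations, which is exactly what a graph query language expresses compactly.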

Where to start, where to learn ? #

HuggingFace Learn #

When looking for good tutorials to begin with, I came upon the Learn section of the HuggingFace Hub. There you can find complete lessons and tutorials on LLM usage and training, agentic frameworks like smolagents and LangChain, and even an MCP course that was released only a few months before I wrote this. I found these courses complete and well written, with hands-on exercises that you can run either with their inference options (beware of the costs) or locally with your Ollama instance.

That being said, you can use whatever material you prefer. I often find YouTube videos to be of great use because they showcase simple use cases that you can duplicate.

DIY is always a good choice #

Nothing compares to practice when trying to learn and understand how things work. You could spend hours reading course materials and watching videos online, but implementing the ideas yourself, even as simple versions designed for dummy use cases, will give you a much better understanding. I decided to try building my own agent using LangChain and Ollama.

My Experimental Agent step by step #

Agentic Framework #

Fortunately, we don’t have to reinvent the wheel. There are multiple open-source AI agent frameworks available, all with their pros and cons. I tested two of them: smolagents from HuggingFace and LangChain/LangGraph.

The first one focuses mainly on Code Agents, which are designed to be able to generate and execute code as they advance through their answering steps. It makes them really powerful but more unpredictable.

On the other side of the spectrum, there is LangGraph. Its graph-based approach allows for more control over the agent workflow, but it requires more setup and understanding. It is also an established framework among professionals, since its control features are most relevant in business and enterprise use cases.

I decided to go with LangGraph because I expected to get more value out of learning it, but ultimately I used both, as you will see later. In any case, if you are experimenting like me, I recommend trying both!

In LangGraph, you define a state, nodes and edges to build the graph of your agent. The state is the data of your application that is passed between the graph nodes along the edges. For my use case, the state is the complete history of messages built by the different nodes. It consists of a series of Human, AI and Tool messages.

```python
class AgentState(TypedDict):
    # add_messages appends new messages to the history instead of replacing it
    messages: Annotated[list[AnyMessage], add_messages]
```
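The `Annotated[..., add_messages]` part attaches a reducer to the `messages` key: when a node returns an update, the reducer decides how it is merged into the state. The sketch below imitates that mechanism with the standard library only. `append_messages`, `ToyState` and `apply_update` are hypothetical names for this illustration; the real `add_messages` does more, for example replacing messages that share an ID.

```python
from typing import Annotated, TypedDict, get_type_hints


def append_messages(current: list, new: list) -> list:
    """Toy reducer: concatenate the update instead of overwriting the history."""
    return current + new


class ToyState(TypedDict):
    messages: Annotated[list, append_messages]


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state, using each key's reducer."""
    hints = get_type_hints(ToyState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        # Annotated types expose their extra arguments in __metadata__.
        metadata = getattr(hints[key], "__metadata__", ())
        reducer = metadata[0] if metadata else None
        merged[key] = reducer(state.get(key, []), value) if reducer else value
    return merged


state = {"messages": ["human: hi"]}
state = apply_update(state, {"messages": ["ai: hello"]})
print(state)
```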

For the first implementation, I’m using two nodes:

- Assistant node - The LLM brain of the agent, it is defined as the start of the flow.

- Tool node - the toolbox containing all the tools and their metadata.

The only specific edge is the link between the assistant and tool nodes. It is a conditional edge, followed only when the LLM requests a tool call.

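```python
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.graph import START, StateGraph
from langgraph.prebuilt import ToolNode, tools_condition

# `assistant` (the LLM node function) and `tools` (the list of tools) are defined elsewhere
builder = StateGraph(AgentState)
memory = InMemorySaver()

builder.add_node("assistant", assistant)
builder.add_node("tools", ToolNode(tools))

builder.add_edge(START, "assistant")
# Route to "tools" when the last AI message contains tool calls, otherwise end
builder.add_conditional_edges(
    "assistant",
    tools_condition,
)
builder.add_edge("tools", "assistant")

agent = builder.compile(checkpointer=memory)
```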

In this sample code, I also added a memory checkpointer so that the LLM remembers previous interactions, as you would expect in a chat application. It also makes it possible to follow up on a query with additional information.
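To see the control flow without any framework, here is a plain-Python imitation of the same loop: assistant, conditional routing, tools, back to the assistant, with one message history per conversation thread. All names are hypothetical and messages are simple dicts; LangGraph itself uses message objects and a real checkpointer.

```python
def assistant(messages: list[dict]) -> dict:
    """Fake LLM node: requests the 'add' tool once, then answers with its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "ai", "content": f"The result is {last['content']}", "tool_calls": []}
    return {"role": "ai", "content": "",
            "tool_calls": [{"name": "add", "args": {"a": 2, "b": 3}}]}


def tools(message: dict) -> list[dict]:
    """Tool node: execute every tool call requested by the assistant."""
    registry = {"add": lambda a, b: a + b}
    return [{"role": "tool", "content": str(registry[call["name"]](**call["args"]))}
            for call in message["tool_calls"]]


def route(message: dict) -> str:
    """Conditional edge: go to the tools if the assistant asked for one, else stop."""
    return "tools" if message["tool_calls"] else "end"


MEMORY: dict[str, list[dict]] = {}  # toy checkpointer: one history per thread id


def invoke(user_text: str, thread_id: str) -> list[dict]:
    """Run one user turn, continuing the history stored for this thread."""
    messages = MEMORY.setdefault(thread_id, [])
    messages.append({"role": "human", "content": user_text})
    while True:
        reply = assistant(messages)
        messages.append(reply)
        if route(reply) == "end":
            return messages
        messages.extend(tools(reply))


history = invoke("What is 2 + 3?", thread_id="demo")
print([m["role"] for m in history])
print(history[-1]["content"])
```

With the compiled LangGraph agent, the thread is selected in a similar spirit by passing a `thread_id` in the `configurable` part of the config given to `invoke`.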

MCP Tools integration #

Since its release in late 2024, MCP has been at the center of the AI world. I figured that while I was experimenting with LLM tools and agents, I would take a look at MCP servers and clients along the way. Obviously, the little experiment I’m building did not need this more complex setup.

Tools are the agent’s means of interaction. For a tool to be effective, it needs precise documentation and typed inputs. In the Python version of the MCP server I used, all of this is done through type hints and docstrings. Apart from that, the MCP implementation is quite simple. Look at this math MCP server:

```python
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Math")


@mcp.tool()
def multiply(a: float, b: float) -> float:
    """
    Multiplies two numbers.

    Args:
        a (float): the first number
        b (float): the second number
    """
    return a * b


@mcp.tool()
def add(a: float, b: float) -> float:
    """
    Adds two numbers.

    Args:
        a (float): the first number
        b (float): the second number
    """
    return a + b


if __name__ == "__main__":
    mcp.run(transport="stdio")
```

You can define as many MCP servers as you need: I created one for math, one for fetching the weather, one for searching the web, … One of the advantages of this MCP setup is the reusability of these servers. You implement a tool once, and then you can use it in as many agents or applications as you want.

You can also use publicly available MCP servers, like the ones from GitHub or Google, to interact with their services. It is a formidable way to quickly build well-integrated toolboxes, but the downside is that your data may transit through remote servers.

To connect your agent’s LLM node to the different MCP servers, I used the MultiServerMCPClient provided by langchain_mcp_adapters together with the bind_tools method.
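A rough sketch of that wiring could look like the following. Treat it as an assumption-laden outline rather than the exact code of this project: the server script names are hypothetical, the imports require the `langchain-mcp-adapters` and `langchain-ollama` packages, and the client API (in particular the awaitable `get_tools`) follows recent releases of the adapter and may differ in your version.

```python
import asyncio

# Hypothetical server scripts; one entry per MCP server launched over stdio.
SERVERS = {
    "math": {"command": "python", "args": ["math_server.py"], "transport": "stdio"},
    "data_analysis": {"command": "python", "args": ["analysis_server.py"], "transport": "stdio"},
}


async def build_llm_with_tools(model_name: str):
    """Collect the tools of all MCP servers and bind them to a local chat model."""
    # Lazy imports so the configuration above can be inspected without the packages.
    from langchain_mcp_adapters.client import MultiServerMCPClient
    from langchain_ollama import ChatOllama

    client = MultiServerMCPClient(SERVERS)
    tools = await client.get_tools()  # assumed to be a coroutine, as in recent releases
    llm_with_tools = ChatOllama(model=model_name).bind_tools(tools)
    return llm_with_tools, tools


# Example call (needs the packages, the server scripts and a running Ollama):
# llm_with_tools, tools = asyncio.run(build_llm_with_tools("your_model_name"))
```

The returned `tools` list is the same one you would hand to the tool node of the graph, so the LLM and the tool node agree on what is available.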

Adding Data Analysis Capabilities with Code Agent #

Until now, everything we did was based on simple tools with no real added value. Adding the ability to browse the web with DuckDuckGoSearchRun from LangChain is certainly useful to overcome the LLM’s lack of up-to-date data, but you can now get this in any GUI such as PageAssist or OpenWebUI. What can I add to my agent that would be really useful to me?

I’m a Data Scientist, and as such, most of my time is spent analyzing data. That means exploring it, computing statistics and metrics, and visualizing them. It is a repetitive task, but not so simple to automate, because the exploration is different for each use case. What if I could ask an agent to explore a dataset? That would mean loading, say, a CSV file, computing some statistics, drawing charts and reflecting on the outputs.

Now, I said earlier that the Learn section from HuggingFace was an excellent starting point for the journey. It’s even better than that: it’s a gold mine! The Open-Source AI Cookbook contains a lot of recipes that can be adapted to your needs. It is also a great source of inspiration for possible applications of LLMs and agents. I found implementations of an Analytics Assistant and a Knowledge Graph RAG.

I decided to follow the implementation of the Data Analysis Agent using a smolagents CodeAgent and expose its analysis capabilities as a tool to my manager agent. I am not sure that this architecture is a recommended one, but it will do for the time being. Code Agents, as I mentioned, can execute code snippets while progressing through their tasks. I think of one as an agent that can build its own tools, at least simple ones. Let’s have a look at my version:

```python
import os

from mcp.server.fastmcp import FastMCP
from smolagents import CodeAgent, LiteLLMModel

# Assumption: the Ollama address comes from the environment, with Ollama's default as fallback
OLLAMA_HOST = os.environ.get("OLLAMA_HOST", "http://localhost:11434")

mcp = FastMCP("Data Analysis")

model = LiteLLMModel(
    model_id="ollama_chat/your_model_name",
    api_base=OLLAMA_HOST,
    num_ctx=8192,
)

# The code agent may only import the libraries listed here
agent = CodeAgent(
    tools=[],
    model=model,
    additional_authorized_imports=[
        "numpy", "pandas", "matplotlib.pyplot", "seaborn"],
)


@mcp.tool()
def run_analysis(additional_notes: str, source_file: str) -> str:
    """Analyses the content of a given csv file.

    Args:
        additional_notes (str): notes to guide the analysis
        source_file (str): path to local source file
    """
    prompt = f"""You are an expert data analyst.
    Please load the source file and analyze its content.

    The first analysis to perform is a generic content exploration,
    with simple statistics, null values, outliers, and types
    of each column.

    Secondly, according to the variables you have, list 3
    interesting questions that could be asked on this data,
    for instance about specific correlations.
    Then answer these questions one by one, by finding the
    relevant numbers. Meanwhile, plot some figures using
    matplotlib/seaborn and save them to the (already existing)
    folder './figures/': take care to clear each figure
    with plt.clf() before doing another plot.

    In your final answer: summarize the initial analysis and
    these correlations and trends. After each number derive
    real-world insights. Your final answer should have at
    least 3 numbered and detailed parts.

    - Here are additional notes and query to guide
    your analysis: {additional_notes}.
    - Here is the file path: {source_file}.
    """
    return agent.run(prompt)


if __name__ == "__main__":
    mcp.run(transport="stdio")
```

As you can see, the tool is just a prompt asking the agent to analyze the given CSV file! The first few tests I ran with this version were already quite impressive: it created visualizations, computed metrics and reflected on them to derive insights in its final answer. This first version could be improved with dedicated tools, such as predefined plots and metrics, to gain more control over what the agent does when we call it.
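Since a code agent runs code that it wrote itself, limiting what that code may import matters, which is the purpose of the additional_authorized_imports list. The sketch below shows the general idea of such an allow-list using Python's `ast` module. It is an illustration only, not how smolagents implements it, and it uses exact name matching, which is stricter than a real implementation needs to be.

```python
import ast

AUTHORIZED = {"numpy", "pandas", "matplotlib.pyplot", "seaborn"}


def unauthorized_imports(code: str, authorized: set[str] = AUTHORIZED) -> list[str]:
    """Return the imported module names that are not on the allow-list."""
    found: list[str] = []
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            found.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            found.append(node.module)
    # Exact matching only: "matplotlib.pyplot" is allowed, "matplotlib" alone is not.
    return [name for name in found if name not in authorized]


generated_code = """
import pandas as pd
import os
import matplotlib.pyplot as plt
"""
print(unauthorized_imports(generated_code))  # prints ['os']
```

A guard like this would run before executing any snippet produced by the model, rejecting the snippet if the returned list is not empty.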

Using Knowledge Graphs to enhance RAG #

I tried Vector Stores and traditional RAG a few months back, when I first heard about the technique. The idea is to improve the quality of an LLM’s answers using specific data, for example more recent data or data dedicated to a certain domain. The typical example for me is a coding assistant capable of searching through the documentation of a specific language or package. Another technique to achieve this would be fine-tuning, but in the case of LLMs its constraints are quite heavy, making RAG a good alternative.

More recently, Knowledge Graphs (KG) have been introduced as a way to improve LLM answers in semantic searches by adding contextual understanding of the data. They also give a way to better explain the reasoning made by the LLM.

The recipe from HuggingFace uses Neo4J as the graph database. I am using the Docker version of Neo4J to host my sample database, but there is also a free plan for hosting on Neo4J AuraDB. For the sake of the experiment, I’m using the proposed dataset as a base: a graph containing Article, Author and Topic nodes, with edges describing the relations between them (published by and in topic). It is representative of a research AI assistant, with for example a database derived from arXiv.

First load the Neo4J graph:

```python
import os

from langchain_community.graphs import Neo4jGraph

# Connection settings are read from environment variables
graph = Neo4jGraph(
    url=os.environ["NEO4J_URI"],
    username=os.environ["NEO4J_USERNAME"],
    password=os.environ["NEO4J_PASSWORD"],
)
```

For graph databases, LangChain provides a GraphCypherQAChain that allows us to query our graph database using natural language.

As with the Data Analytics Assistant, the queries are handled by a dedicated agent, here from LangGraph, with its own set of tools and instructions.

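```python
# Import paths may differ depending on your LangChain version
from langchain_community.chains.graph_qa.cypher import GraphCypherQAChain
from langchain_core.tools import Tool
from langchain_ollama import ChatOllama

cypher_chain = GraphCypherQAChain.from_llm(
    cypher_llm=ChatOllama(model="a_local_model", temperature=0.0),
    qa_llm=ChatOllama(model="a_local_model", temperature=0.0),
    graph=graph,
    verbose=True,
    allow_dangerous_requests=True,  # should add control in real world
)


def graph_retriever(query: str) -> str:
    # invoke() returns a dict; the generated answer is under the "result" key
    return cypher_chain.invoke({"query": query})["result"]


graph_retriever_tool = Tool(
    name="graph_retriever_tool",
    func=graph_retriever,
    description="""Retrieves detailed information about
    articles, authors and topics from graph database.
    """,
)
```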

I decided to bind this tool to a dedicated agent and build a multi-agent system, mostly for experimentation purposes. But the graph_retriever_tool can also be used as a standalone tool by the manager agent, or even exposed through MCP as I did for the data analysis.

I ran the tests suggested in the recipe. They are requests that force the system to build complex Cypher queries to traverse the graph, such as

Are there any pair of researchers who have published more than three articles together?

and it found the right answers! The system was able to generate a complex query and produce a coherent final response.

Gradio for chat interaction #

The only missing piece of the puzzle is a way to interact with the system. That is where Gradio comes in. It is an open-source Python package that lets you quickly build a demo or web application for AI models. I used the built-in ChatInterface to create a simple, locally hosted chat page to interact with the agent.
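A minimal sketch of such a chat page is shown below. The `respond` function is a placeholder that just echoes the message; in the real application this is where the agent would be invoked. The function names and the page title are made up for the example, and Gradio is imported lazily so the callback can be tried without it installed.

```python
def respond(message: str, history: list) -> str:
    """Chat callback: receives the new user message and the previous turns."""
    # Placeholder logic; this is where the agent would be called.
    return f"You said: {message}"


def launch_chat() -> None:
    """Start a local chat page (requires `pip install gradio`)."""
    import gradio as gr  # imported lazily so `respond` can be tested without Gradio

    gr.ChatInterface(fn=respond, title="Experimental agent").launch()
```

Calling `launch_chat()` starts a local web server and prints the address of the chat page.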

Conclusion #

You can find the complete source code for this example on my GitLab. Keep in mind that everything I presented here is evolving rapidly, is subject to change, and can certainly be improved! If you have any questions or suggestions, feel free to reach out!